(music + technology) (old + new)

blog formerly https://spookyandthemetronome.wordpress.com/

Upcoming book: The Museum of Imaginary Musical Instruments

As a reader of this blog, you may be familiar with the online Museum of Imaginary Musical Instruments – a site Thomas Patteson and I launched in 2013 to showcase imaginary musical instruments we came across in our research.

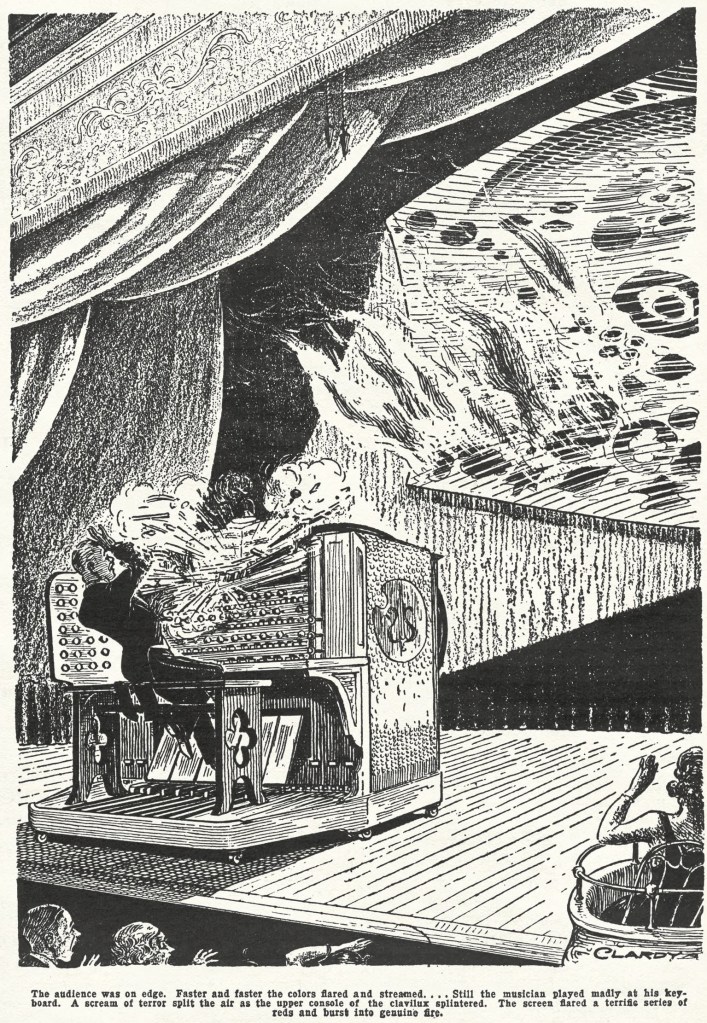

Now, it’s becoming a book! And it turns out the world of imaginary musical instruments is even bigger, weirder and more wonderful than we knew. With over 80 images, the book includes some classics from the online museum (like the cat piano and steam concert) and many new gems. We organized these into a guided tour that offers both a cultural history and a study of creative thought, and reveals changing ideas about sound, invention, and the limits of the possible.

The Museum of Imaginary Musical Instruments will be published by Reaktion in 2026, available in April in the UK and July in the US. We hope it sparks something of the joy and wonder we experienced in gathering these remarkable artifacts.

New Book: Sounding Human

My new book, Sounding Human: Music and Machines, 1740/2020, is out now from University of Chicago Press. Much of the music discussed in the book is available on the accompanying Spotify playlist. Read on for a (tweet-per-track) tour through the playlist and some audio/video examples not available on Spotify!

Introduction

Track 1 Mozart, Fantasie in F Minor K. 608 (1791) illustrates a contrast between modern and 18th-century intuitions about music & machines. It was composed for a mechanical organ clock, and as musicologist Annette Richards pointed out, 20th-century critics dismissed the machine as cheapening Mozart’s music. In its initial reception, the music & its automated performance went together for a heightened awe-inspiring display (one witness described “a thousand varying emotions”). Listen from 8:05 for the increasingly dense and dramatic fugue

Track 2 Kraftwerk, “The Robots” (1978) features the use of a vocoder to turn the voice – and human speaker – robotic. Florian Schneider described their music “expos[ing] the mechanical and robotic attitude of our civilization”

Track 3 Afrika Bambaataa & The Soulsonic Force, “Looking for the Perfect Beat” (1983) illustrates, in the words of Tricia Rose, an “already having been robots” for Black people under slavery/capitalism and a resistive taking control of the robot guise. Listen at 5:50 “we are the future, you are the past”

Wendy Carlos, synthesized finale from Beethoven’s Ninth Symphony (1971). The vocoded “Ode to Joy” is commonly heard as voices drained of humanity, but for Carlos and collaborator Rachel Elkind it was the sound of the synthesizer gaining humanity by singing. Listen, for example, at 5:55

Track 4 Nona Hendryx, “Transformation” (1983) another alternative to vocoder = robot, Hendryx’s use can be heard to sound the voice of mother nature, dissolving natural versus tech/artificial. Listen ex. at 1:58 in the context of the song

Track 5 From Giovanni Battista Pergolesi, La serva padrona (1730s), the main characters mimic a clock bell and bass drum as the sounds of their hearts/love, to which they direct each other to listen, ex. at :51-1:10

Tracks 6 & 7 Queen of the Night aria from Mozart, Magic Flute (1791) & android Olympia’s aria from Offenbach, Tales of Hoffmann (1881) illustrate the leaping vocal virtuosity that came to sound inhuman/like a machine. Listen @ :45 on Track 6 & 1:25 on Track 7

Trk 8 From Mozart’s Magic Flute (1791) at :38 – in contrast to the clock bell of a loving heart in La serva padrona (trk 5), bells here make slaves dance automatically, conflating the mechanical and Blackness in a “racist technopoetics” (Chude-Sokei’s term)

Chapter 1 Becoming Android: Reinterpreting the Automaton Flute Player

Track 9 From opera Pirame & Thisbé (1726), the melody at :43-1:40 was sung in the play L’Oracle (1740) by a character pretending to be an automaton that can only “parrot…at random,” raising questions about being moved by a machine & being human through its singing

Chapter 2 Hybrids: Voice & Resonance

Tracks 10 & 11 Last Magic Flute example: classic interpretations of these tracks illustrate modern hearing of human versus mechanical sound in voice versus bells

Track 12 Alessandro Striggio “Chi fara fede al Cielo,” appears as hydraulic organ music in a 1615 treatise on machines by Salomon de Caus, and exemplifies what might have sounded as Orpheus’s mythic music in the mechanical grotto featuring Orpheus playing a viola da gamba

Track 13 This Prelude for viola da gamba by Marin Marais features letting strings ring, a technique criticized for “stand[ing] in the way” of melody. Compare beginning (continuous melody) to ~1:35-2:00 (technique used to let notes ring while playing others)

Track 14 This viola da gamba piece by Marin Marais imitates the style of a plucked lute, and captures a perception of lute sound quickly decaying – “tic tac” – rather than ringing out

Track 15 In contrast to the lute-imitative piece, this viola da gamba piece by Marin Marais imitates bells, makes extreme use of letting strings ring (ex. :40), and suggests what a defender of the viol against the violin’s rising popularity meant by its ability to reconcile voice & resonance

Track 16 From a violin sonata by Michele Mascitti (1706), illustrates continuous “voice” sound of violin but played on viola da gamba would illustrate a “sparkling sound” combining melodic voice w/ harmony of resonance (according to a viol defender in 1740)

Track 17 This François Couperin piece for harpsichord features a technique of delaying a melody note to create the effect of a crescendo (an illusion of starting on time and growing louder). This was a means to “give soul to the instrument” and makes the harpsichord exemplary not of lifeless mechanism but of music entangling matter and mind. Effect occurs at :56, 1:02 and most notably 1:17.

Chapter 3 Analogies: Diderot’s Harpsichord & Oram’s Machine

Trk 18 “Four Aspects” by Daphne Oram (1960). Oram used it to illustrate that a crescendo requires more than just increased volume: “To get a real feeling of crescendo one has got to…put more & more high frequencies into the tone color as well.” Listen 2:16-3:37

Trk 19 “Contrasts Essconic” by Daphne Oram, performed @ First London Concert of Electronic Music by British Composers (1968). Its contrasts were in mood & sound sources, which included piano, tone generators, musique concrète & the debut of “Oramics” the “graphic sound” system Oram invented for hand-drawn control of electronic sound. Oramics most likely heard at 3:56-4:50 and 5:40-6:43.

The 1968 concert also featured Peter Zinovieff’s “Partita for Unattended Computer,” which got media attention as the “first concert performance ever by a computer.” Featured in this Tomorrow’s World episode – what became the sound of “computer thinking” @ 3:40 https://www.bbc.co.uk/archive/peter-zinovieff--early-electronic-music/zmyf7nb

Excerpt from BBC radio program World at One (1972) in which Daphne Oram demonstrates her “method of translating visual wave patterns into musical sound, in a way that retains the human element” https://research.gold.ac.uk/id/eprint/26356/27/011_Oram_1972_Interview_Excerpt.mp3

Chapter 4 Personifications: Piano Death & Life

New York Times 2012 coverage of pianos going to the dump & responses to it start Chapter 4’s inquiry into personifying pianos:

Annea Lockwood, Piano Burning (1968) https://www.utne.com/arts/bonfire-of-the-ivories-visualize-your-piano-burning/

Chapter 5 Genres of Being Posthuman: Chopped & Pitched

Track 20 Calvin Harris and Rihanna, “This is What You Came For” (2016). The “yooo ooo ooo”s were the 2016 sound of “people don’t want to sound like people anymore” (according to one music critic)

Track 21 Kiiara, “Gold” (2016). Producer Felix Snow described “manipulating vocals to sound like a computer and vice versa” as “the first tiny step toward the computer doing all of the singing”; Kiiara observed, “it’s a sample. That’s all it is.”

Track 22 Zara Larsson and MNEK, “Never Forget You” (2016). In contrast to pitched and chopped lead vocals, processed vocals in instrumental textures ex. 1:07-1:33

Track 23 T-Pain featuring Ludacris, “Chopped N Skrewed” (2008). This 2001: A Space Odyssey parody really sums up autotune anxieties ca. 2008

Track 24 Cher, “Believe” (1998), the breakthrough hit for the sound of autotuned vocals frequently characterized as “robotic”

Track 25 Flume featuring kai, “Never Be Like You” (2016) exemplifies ca. 2016 EDM-pop’s taste for “feathery” female vocals, ex. :19 and 3:30

Track 26 MNEK, “At Night (I Think About You)” (2016), in which producer-singer MNEK manipulates his own voice with and without the computer, as he discusses in this interview esp. at 3:05 https://www.bbc.com/news/entertainment-arts-36744245

Coda: Learning Machines

Track 27 David Cope, “From Darkness, Light” (2010) illustrates one pole of AI in the socio-musical imaginary, with anthropomorphic name Emily Howell emphasizing humanlikeness and implying its generalization to autonomy, etc.

George Lewis and Vijay Iyer in Concert (2012) with Voyager, which they discuss @ 36:30, foregrounding both its need for help to function and capacity for disobedience (a sociomusical imaginary for AI between the extremes of autonomous or “just a tool”)

Track 28 Laetitia Sonami, “Breathing in Birds and Others” (2017) stands in for this artist talk by Sonami in 2013, where she demonstrated her bird with magnetic coils (47:40); this talk was my introduction to neural nets https://archive.org/details/sonami

Trks 29-30 are from Dadabots, Can’t Play Instruments, Vol 1 (2021), an album of neural network-generated hardcore punk that continued the duo’s front of humanless musical creation (while elsewhere Dadabots discuss “using the neural nets as an instrument”)

Track 31 Holly Herndon, Jlin, Spawn, “Godmother,” PROTO (2019) lent itself to the idea of AI acquiring sentience

Tracks 32-33 Jlin’s “Expand” (2015) & “Embryo” (2021). Listening to these tracks clarifies “Godmother” as the sound of a model trained on Holly Herndon’s voice & style transfer to Jlin’s music

Jennifer Walshe and Memo Akten, Ultrachunk (2018). Walshe describes performing the neural network trained on her voice as working with an “alien intelligence”

Hexorcismos, Transfiguración (2020). Another example of alternative to likening/comparing AI to human, Hexorcismos describes “approaching the Neural Network as an alchemical technology,” which aptly characterizes the process of style transfer

Tracks 34-37 Arca, “Riquiquí” (2020) & from Riquiquí; Bronze-Instances(1-100) (2020), the album of its 100 remixes via AI – or in her term that steps outside the usual frame of human comparison to contemplate machine capabilities, “intelligent sentience”

Tools for Sound Thinking: On Music Tech Narratives

According to composer and journalist Kyle Gann, the musical note ceased to be the basic unit of musical composition sometime in the 1980s. In that decade, the advent of samplers (such as the Akai MPC60) made it possible to build pieces not from notes but from any recorded sound complex. It’s a view Richard Taruskin recapitulates in his Oxford History of Western Music, which takes written notation as the enabling condition and unifying feature of Western art music, but ends with the “advent of postliteracy.” As Taruskin, citing Gann, argues, “the sampler frees composers from the habits inculcated by Western notation.”

Yet the 1980s witnessed the invention not only of digital samplers, but also of MIDI. MIDI – an acronym for Musical Instrument Digital Interface – was designed to enable synthesizers, regardless of manufacturer, to be linked together, primarily so that keyboard playing on one device could activate sound-generating capacities on another. Thanks to the cheapness of MIDI’s implementation, and the fact that its designers opted not to patent it, it was quickly adopted far and wide in digital music devices.

The result, according to technologist and musician Jaron Lanier, runs directly counter to the liberation narrative posited by Gann and Taruskin. “The whole of the human auditory experience has become filled with discrete notes that fit a grid,” Lanier writes. Rather than seeing digital music technologies emancipating musicians from the habits of Western notation, Lanier sees them delimiting and restricting the parameters of the note that notation had left fuzzy and flexible. “Before MIDI, a musical note was a bottomless idea that transcended absolute definition.…After MIDI, a musical note was no longer just an idea, but a rigid, mandatory structure you couldn’t avoid in the aspects of life that had gone digital.”
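To make the "grid" concrete, here is a minimal sketch in Python of what a note looks like once it passes through MIDI. It is my illustration, not anything drawn from Lanier, Gann, or Taruskin. It assumes twelve-tone equal temperament with A above middle C at 440 Hz, and the function names (`nearest_midi_note`, `note_on`, `note_to_hz`) are hypothetical helpers made up for this example. A basic Note On message is three bytes: a status byte carrying the channel, a note number from 0 to 127, and a velocity from 0 to 127.

```python
import math

A4_NOTE = 69     # MIDI note number conventionally assigned to the A above middle C
A4_HZ = 440.0    # assumed concert pitch; other tunings would change this


def nearest_midi_note(freq_hz: float) -> int:
    """Snap a frequency to the nearest of MIDI's 128 note numbers."""
    note = round(A4_NOTE + 12 * math.log2(freq_hz / A4_HZ))
    return max(0, min(127, note))  # clamp to the range MIDI allows


def note_to_hz(note: int) -> float:
    """Frequency of a MIDI note number under the same tuning assumption."""
    return A4_HZ * 2 ** ((note - A4_NOTE) / 12)


def note_on(note: int, velocity: int = 64, channel: int = 0) -> bytes:
    """Build a three-byte Note On message: status, note number, velocity."""
    if not (0 <= note <= 127 and 0 <= velocity <= 127 and 0 <= channel <= 15):
        raise ValueError("note and velocity must be 0-127, channel 0-15")
    return bytes([0x90 | channel, note, velocity])


if __name__ == "__main__":
    # A slightly sharp A (450 Hz) lands on the same grid point as 440 Hz.
    for hz in (440.0, 450.0):
        n = nearest_midi_note(hz)
        print(hz, "->", n, note_on(n).hex(), round(note_to_hz(n), 2))
```

In this sketch a pitch of 450 Hz and a pitch of 440 Hz produce the same message. MIDI does have other messages, such as pitch bend, that can carry some of what is lost, but the note number itself stays an integer on a fixed scale.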

Did we become more firmly in thrall to notes than ever before, or more emancipated from their rule? Are our auditory worlds filled with “discrete notes that fit a grid,” or with rich “sound complexes”? Both Gann and Lanier posit a direct relationship between instrumental technologies and the very basis of musical construction. In their mirror-image narratives, Gann and Lanier thus agree on a fundamental. Stepping back, however, we can see that both take one tool as the basis for the whole musical world. (We might think of the cliché that when you have a hammer, everything looks like a nail.)

The issue here is not simply one of parts and wholes. Rather, there is a foundational issue having to do with narratives of technology in relation to musical thought and practice. Instead of thinking that any one tool becomes the whole musical world, we may find that there is a whole world in any musical tool.
