How do musicians communicate emotion? Performers have often answered this question in terms of what the performer should think and feel. According to the eighteenth-century keyboardist C. P. E. Bach, “a musician cannot move others unless he too is moved.” For nineteenth-century pianist Johann Nepomuk Hummel, emotion came from the performer’s “ability to grasp what the composer himself has felt, expressing it in his playing, and making it pass into the souls of the listener. This can be neither notated nor indicated.”

After hearing an unmoving performance of a Mozart piano concerto, Daniel Levitin decided to address the question scientifically. Levitin describes the experiment his lab conducted in this video:

Like much research in the psychology of music, this experiment seems to uncover a universal principle governing how music affects us – a principle summed up in the video’s title, “It’s all in the timing: how musicians communicate emotion.” But the experiment owes an unacknowledged debt to a revolution in piano performance that occurred at the start of the previous century; and it may well be contributing to another musical revolution currently underway.

Pianos in the Lab

all of the expressive nuance that comes from [the piano] comes from really just a definable set of parameters the pianist has under his or her control. The pianist can decide when to hit the key, when to let up the key, how hard to hit it, and then where to put the pedal; and everything that we hear, whether it’s tears of joy or tears of boredom, comes from these four decisions that the pianist makes.

Daniel Levitin, “It’s all in the timing” video

As Julia Kursell observes in a fascinating (highly recommended) article in the most recent issue of Grey Room (sorry non-subscribers, it’s $12), musical performance emerged as an object of scientific study around 1900, when for the first time physiologists and psychologists were able to record the fleeting processes of performance. Of course, you might think, that’s when the phonograph was becoming available. But it wasn’t the phonograph the early scientists of musical performance turned to. These scientists wanted to analyze not sound, but touch – the magical touch of the expert pianist. To cite just one expression of the typical view on piano performance from the period (which contrasts markedly with Levitin’s account of piano performance quoted above):

The artistically intelligent interpreter knows how to tint the sound of the piano in infinitely manifold ways, shading it or making it sparkle! All this happens through the changing manner of touch.

Zeitschrift für Instrumentenbau, 1905

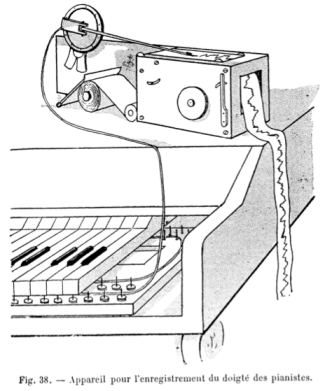

To analyze pianists’ touch, Parisian psychologists Alfred Binet and Jules Courtier developed an apparatus that registered the time and pressure at which the pianists pressed the keys, recording this information in the fashion of a seismograph:

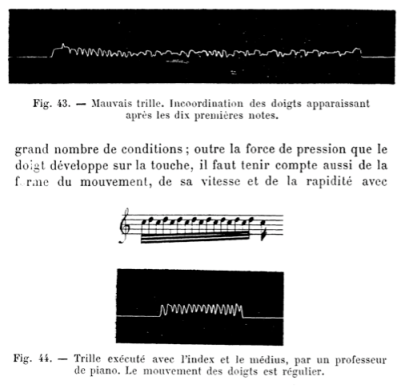

Binet and Courtier used their graphs to show that the best pianists had the greatest regularity in execution. Here, for example, they compare a “bad trill,” with squiggles all different shapes, to an expert’s trill, characterized by consistency and regularity:

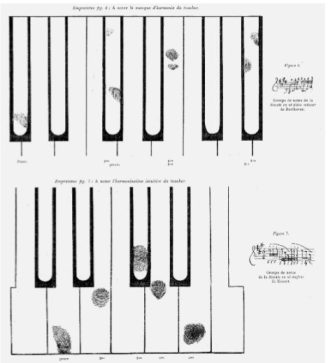

Around the same time (the 1890s), pianist-turned-researcher Marie Jaëll developed another method for registering touch: covering the keyboard with strips of paper and coating the fingers with printing ink, she recorded the placement and quality of the fingers’ touch upon the keys. Here, she too compares bad with good touch:

For Jaëll – who had an illustrious career as a concert pianist – touch linked pianist and piano in a way that was absolutely essential for musical performance. She explained, “As soon as we get a beautiful sonority from the instrument, a link between our own organism and the instrument is established, and via this link, another link to music.” By analyzing fingerprints on the keyboard, Jaëll sought to determine the characteristics of musical touch.

Then, in the 1900s, the player piano hit the market. The player piano changed everything by introducing piano performance without keyboard touch. At first, piano rolls contained only metrically exact renditions of the notes of a musical score. Such performances were considered mechanical and soulless. To render them expressive, companies added levers that allowed a human performer to control the tempo and volume of the music, along with the sustaining pedal (see-hear a demo of such an instrument here – the performance at the end will surprise you!). In place of sensitive touch, these instruments decomposed piano performance into the three independent variables manipulated in the Levitin experiment – timing, dynamics, and pedaling.

Soon, timing, dynamics and pedaling too were automated with the piano rolls of a new type of instrument: the reproducing piano. Unlike the player piano, which played piano rolls generated straight from the score and had no mechanism for automated dynamics, the reproducing piano played piano rolls made from actual performances, complete with the performer’s temporal and dynamic nuances. The result was a new scientific instrument for the study of musical performance.

In 1928, an article appeared in the Journal of Applied Psychology entitled “A New Method of Analyzing Musical Style by Means of the Reproducing Piano.” By examining piano rolls, the author Guy Montrose Whipple could see how pianists executed each of the elements recorded by the reproducing piano, namely pitch, timing, dynamics (at 16 different gradations) and pedaling. As a result, it was obvious to Whipple that:

outside of difference in pedaling, the renditions of the same music by two pianists can differ in two respects and in these two respects only – namely, in respect to the temporal and in respect to the intensity values of each note played. If Paderewski has any magic touch, it has to be translated into duration and intensity…We have a picture set forth for us on the roll of the manner in which his effects are produced.

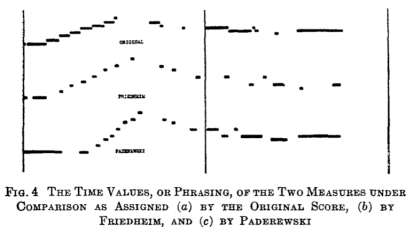

Whipple thus set about comparing performances. As a reference point, he used the music as it would have been programmed for the old player piano. This enabled Whipple to say with regard to the passage illustrated below, for example, that “the [first] note is played by Friedheimer practically as it is written, but Paderewski holds it as long as the next five notes combined.” Whipple observed such differences not to differentiate good from bad performances but rather to quantify pianists’ individual styles. As Kursell argues, individual style came to the fore as a performance virtue after the previous virtue of regularity was taken over by the soulless player piano.

The piano used in the Levitin lab is a sophisticated reproducing piano, which generates and reads not paper piano rolls but MIDI files that can be readily manipulated with MIDI editing software. This technology enabled the lab to do something that had never been done before: create “a continuum of emotional versions.”

“A Continuum of Emotional Versions”

To our knowledge, this is the first study to present a continuum of carefully controlled variations of music in the physical domain from which were obtained the corresponding responses in the psychological domain.

Anjali Bhatara et al., “Perception of Emotional Expression in Musical Performance,” Journal of Experimental Psychology

As Levitin explained in the above video, the musical performance study began with a performance by an expert musician; the researchers then “edit[ed] out all of the expressive nuance that comes from variation in timing and loudness.” In the resulting research paper, they called the recording of the original human performance the “expressive” performance, and the performance from which they removed all temporal and dynamic deviations the “mechanical” performance. To produce gradations between the expressive and the mechanical versions, they added the variations in timing and loudness back in at 25%, 50% and 75%. The legacy of the player piano can be seen in their decision to eliminate all dynamic variation from the mechanical version, even though dynamics are indicated in the score: the idea that the performer may manipulate dynamics at will but may not change any pitches derives from the player piano.
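To make the idea of adding variation back in "at 25%, 50% and 75%" concrete, here is a minimal Python sketch. It is not the lab's actual procedure: it assumes a simple linear blend, note by note, between a mechanical and an expressive rendition, and the `Note` fields and the `blend` function are hypothetical names invented for this illustration. Pitches are never touched, in keeping with the player piano model described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Note:
    """One note of a performance (hypothetical structure for this sketch)."""
    pitch: int       # MIDI note number; never altered by the blend
    onset: float     # start time in seconds
    duration: float  # held length in seconds
    velocity: int    # MIDI key velocity, 1..127 (stands in for loudness)


def blend(mechanical, expressive, amount):
    """Return a version lying `amount` of the way from mechanical to expressive.

    amount = 0.0 gives the mechanical version, 1.0 the expressive one,
    and values above 1.0 exaggerate the performer's deviations.
    Linear interpolation is an assumption of this sketch.
    """
    if len(mechanical) != len(expressive):
        raise ValueError("both versions must contain the same notes")
    result = []
    for m, e in zip(mechanical, expressive):
        if m.pitch != e.pitch:
            raise ValueError("pitches must match; only timing and loudness vary")
        onset = m.onset + amount * (e.onset - m.onset)
        # A duration cannot be negative, even when deviations are exaggerated.
        duration = max(0.0, m.duration + amount * (e.duration - m.duration))
        velocity = round(m.velocity + amount * (e.velocity - m.velocity))
        velocity = max(1, min(127, velocity))  # stay inside the MIDI range
        result.append(Note(m.pitch, onset, duration, velocity))
    return result


# Two invented notes: even and uniform in the mechanical version,
# stretched and shaded in the expressive one.
mechanical = [Note(60, 0.0, 0.5, 64), Note(62, 0.5, 0.5, 64)]
expressive = [Note(60, 0.0, 0.6, 80), Note(62, 0.7, 0.4, 48)]

for amount in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(amount, blend(mechanical, expressive, amount))
```

The point of the sketch is how little is being varied: three numbers per note, scaled by a single factor.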

The antithesis posed between the expressive and the mechanical, however, harks back even further than the player piano, to the music-playing automata of the eighteenth century. In response to Vaucanson’s flute-playing automaton that provided a major attraction in the 1740s, the court composer and flute virtuoso Johann Joachim Quantz wrote in 1752:

With skill a musical machine could be constructed that would play certain pieces with a quickness and exactitude so remarkable that no human being could equal it either with his fingers or with his tongue. Indeed it would excite astonishment, but it would never move you; and having heard it several times, and understood its construction, you would even cease to be astonished. Accordingly, those who wish to maintain their superiority over the machine, and wish to touch people, must play each piece with its proper fire.

Quantz’s claim proceeds from the same premise as C. P. E. Bach’s and Hummel’s statements on expression quoted at the outset of this post: to communicate emotion, the performer must himself feel or understand the emotion. In the nineteenth century, “playing like an automaton” became a typical barb against performers thought to play without feeling. The setup of the Levitin lab experiment reflects this thinking: the poor performer who failed to move Levitin with his rendition of a Mozart concerto appears in the experiment as the “mechanical version.”

Yet the antithesis between expressive and mechanical – long supported by an impermeable boundary between feeling, sentient human and inanimate machine – entered the Levitin lab experiment only to be undone by the series of intermediary stages inserted in between. The study proceeded from the idea (and then confirmed it through listeners’ rankings) that the mechanical is not unmoving but less moving – that human and machine are miscible and can be mixed in different proportions to create different degrees of emotional expression.

Hyperhuman Performance

The possibility of being moved by a machine that itself feels no emotion has historically been a source of anxiety and psychological disturbance. Exhibit A in this phenomenon is E. T. A. Hoffmann’s famous story “The Sandman,” in which a man falls in love with an automaton, mistaking it for a living woman. Not coincidentally, a musical performance by the automaton plays an important role in the man’s deception (the automaton’s song became the basis for an unforgettable number in Offenbach’s opera The Tales of Hoffmann).

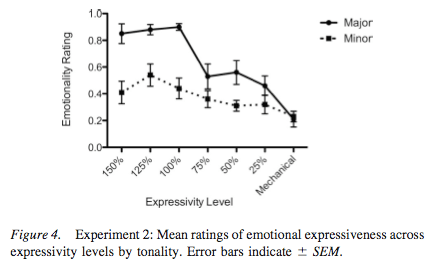

It may thus come as a relief that the Levitin lab found that hyperexpressive performances – performances at 125% and 150% of the original human performance – were not perceived as more emotional by listeners. As they put it in the research paper, “the amount of expressivity the performer chose to add to these pieces may well have been at the optimal level, beyond which extra expression (as implemented by increased variation in amplitude and timing) would not be perceived or preferred by the listener.” When told of the research by The New York Times, Paul Simon said, “I find it fascinating that people recognize what the point of the original version is, that that’s their peak.”

Or is it?

The researchers tested the perception of “beyond expressive” performances with two music examples, taken from Chopin’s nocturnes Op. 32 No. 1 and Op. 55 No. With the first, the original performance was indeed rated highest in emotion. With the second, however, 125% received the highest rating, the results showing a fairly constant upward trajectory from the mechanical to 125%:

In the Journal of Experimental Psychology article, the researchers emphasize that their results on the perception of “beyond expressive” performances may not be generalizable from the examples used, and that the phenomenon requires further study. The popularity of their “optimal” human performance idea with the media, however, betrays the underlying anxiety that a machine-made performance might be more emotionally moving than a human performance – the desire to believe, with Quantz, that the human performer will always maintain his superiority over the machine in this important respect.

The Levitin lab experiment thus opens the door to hyperexpressivity – a door many will not wish to pass through. By opening the door, however, the study may contribute to a shift away from the player piano model of musical performance – in which individuality and emotion are expressed through temporal and dynamic fluctuations – toward a model based on collaboration between human and instrument. In this, the study may move us toward the 21st-century version of those productive moments of contact Jaëll sought to capture with her fingerprints.

Listening: the recordings used in the Levitin lab experiment on musical performance